I suspect that many of us, as computer users, have had the experience of searching for some computer file that we know we saved somewhere, but can’t seem to find. Even more frustrating is the situation where, having spent time looking for the file and having found it, we discover either that the file has been corrupted, or is in a format that our software can no longer read. This is perhaps most likely to happen with digital photographs or videos, but it can also happen with text files, or even programs themselves. This is the problem of Digital Obsolescence.

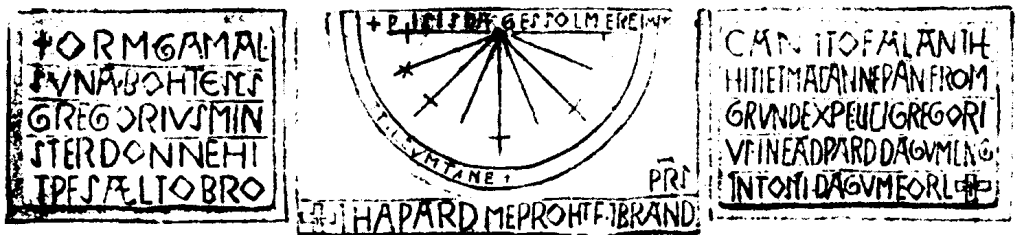

In an earlier post, I mentioned a vector graphics file format called SVG, and I showed how you can use a text editor to open SVG files and view the individual drawing instructions in the file. I didn’t discuss the reason why it’s possible to do that with SVG files, but not with some other file types. For example, if you try to open an older Microsoft Word file (with a .doc extension) with a text editor, all you’ll see are reams of apparently random characters. Some file types, such as SVG, are “text encoded”, whereas other types, such as Word .doc files, are “binary encoded”.
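The difference between the two kinds of encoding can be illustrated with a rough Python sketch. The function name `looks_like_text` and the two sample byte strings are my own illustrative assumptions, and the check is only a heuristic (no NUL bytes, and the bytes decode as UTF-8), not a definitive test of a file's format.

```python
def looks_like_text(data: bytes) -> bool:
    """Heuristic: treat data as text if it has no NUL bytes and decodes as UTF-8."""
    if b"\x00" in data:
        return False
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


# A tiny SVG document: readable characters from start to finish.
svg_sample = b'<svg xmlns="http://www.w3.org/2000/svg"><circle r="5"/></svg>'

# The first eight bytes are the signature of the compound-file container
# used by legacy .doc files; the padding stands in for binary content.
doc_sample = bytes.fromhex("d0cf11e0a1b11ae1") + bytes(16)

print(looks_like_text(svg_sample))  # True
print(looks_like_text(doc_sample))  # False
```

To try this on a real file, you could pass in the result of `Path(name).read_bytes()` from the standard `pathlib` module.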

Within the computer industry, text-encoded file formats have become increasingly accepted as the preferred choice for many applications. The reason is the recognition of a serious problem: data stored in a particular binary format eventually becomes unreadable because software that supports that format is no longer available. In some cases, the specification defining the data structure is no longer available either, so the data can no longer be decoded.

The general problem is one of “data retention”, and it has several major aspects:

- Storing data on physical media that will remain accessible and readable for as long as required.

- Storing data in formats that will continue to be readable for as long as required.

- Where files are encrypted or otherwise secured, ensuring that passwords and keys are kept in some separate but secure location where they can be retrieved when necessary.

Most people who have used computers for a few years are aware of the first problem, as storage methods have evolved from magnetic tapes to optical disks, and so on. However, fewer people consider the second and third problems, which are what I want to discuss in this article.

Digital Obsolescence: The Cost of Storage and XML

In the early days of computers, device storage capacities were very low, and the storage itself was expensive. Thus, it was important to make the most efficient use of all available space. For that reason, binary-encoded files tended to be preferred over text-encoded files, because binary encoding was generally more compact.

However, those days are over, and immense quantities of storage are available very cheaply. Thus, even if text encoding is less efficient than binary encoding, that’s no longer a relevant concern in most cases.

Many modern text-encoded formats (including SVG and XHTML) are based on XML (eXtensible Markup Language). XML provides a basic structure for the creation of “self-describing data”. Such data can have a very wide range of applications, so, to support particular purposes, most XML files use document models, called Document Type Definitions (DTDs) or schemas. Many XML schemas have now been published, including, for example, WordprocessingML, part of Microsoft’s Office Open XML standard, which defines the structure of the content of newer Word files (those with a .docx extension).
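To give a feel for what “self-describing” means in practice, here is a minimal Python sketch that reads a small SVG fragment using the standard library's `xml.etree.ElementTree` module and lists each element with its attributes. The sample drawing and the helper name `describe` are invented for illustration. The program needs no knowledge of SVG itself, because the element and attribute names are stored in the file alongside the values.

```python
import xml.etree.ElementTree as ET

# A made-up SVG drawing, used only for illustration.
SVG_TEXT = """<svg xmlns="http://www.w3.org/2000/svg" width="100" height="100">
  <rect x="10" y="10" width="80" height="30" fill="blue"/>
  <circle cx="50" cy="70" r="20" fill="red"/>
</svg>"""


def describe(xml_text: str) -> list[str]:
    """Return one line per element, giving its name and its attributes."""
    root = ET.fromstring(xml_text)
    lines = []
    for element in root.iter():
        # ElementTree prefixes each tag with "{namespace}"; keep the local name.
        name = element.tag.split("}")[-1]
        attrs = ", ".join(f"{key}={value}" for key, value in element.attrib.items())
        lines.append(f"{name}: {attrs}")
    return lines


for line in describe(SVG_TEXT):
    print(line)
```

Even someone who had never seen the SVG specification could make a reasonable guess at what a `circle` with `cx`, `cy` and `r` attributes is supposed to draw, which is exactly what makes such formats resistant to obsolescence.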

XML is a huge subject in its own right, and many books have been written about it, even without considering the large number of schemas that have been created for it. I’ll have more to say about aspects of XML in future posts.

Digital Obsolescence: Long Term vs. Short Term Retention

Let’s be clear that the kind of “data retention” I’m talking about here refers to cases where you want to keep your data for the long term, and ensure that your files will still be readable or viewable many years in the future. For example, you may have a large collection of digital family photos, which you’d like your children to be able to view when they have grown up. Similarly, you may have a diary that you’ve been keeping for a long time, and you’ll want to be able to read your diary entries many years from now.

This is a very different problem from short-term data retention, which is a problem commonly faced by businesses. Businesses need to store all kinds of customer and financial information (and are legally required to do so in many cases), but the data only needs to be accessible for a limited period, such as a few years. Much of it becomes outdated very quickly in any case, so very old data is effectively useless.

There are some organizations out there that will be happy to sell you a “solution” to long-term data retention that’s actually useful only for short-term needs, so it’s important to be aware of this distinction.

Digital Obsolescence: Examples from my Personal Experience

In the early “pre-Windows” days of DOS computers, several manufacturers created graphical user interfaces that could be launched from DOS. One of these was the “Graphics Environment Manager” (GEM), created by Digital Research. I began using GEM myself, largely because my employer at the time was using it. One facet of GEM was the “GEM Draw” program, which was (by modern standards) a very crude vector drawing program. I produced many diagrams and saved them in files with the .GEM extension.

A few years later, I wanted to reuse one of those GEM drawing files, but I’d switched to Windows, and GEM was neither installed on my computer nor even available to buy. I soon discovered that there was simply no way to open a GEM drawing file, so the content of those files had become “extinct”.

Similarly, during the 1990s, before high-quality digital cameras became available, I took many photographs on 35mm film, but had the negatives copied to Kodak Photo CDs. The Photo CD standard provided excellent digital versions of the photos (by contemporary standards), with each image stored in a PCD file at five resolutions. Again, years later, when I tried to open a PCD file with a recent version of CorelDRAW, I discovered that the PCD format was no longer supported. Fortunately, in this case, I was able to use an older version of CorelDRAW to batch-convert every PCD file to a more modern format, so I was able to save all my pictures.

Digital Obsolescence: Obsolete Data vs. Obsolete Media

As mentioned above, the problem I’m describing here doesn’t relate to the obsolescence of the media that contain the files you want to preserve. For example, there can’t be many operational computers still around that have working drive units for 5.25” floppy disks (or even 3.5” floppy disks), but those small disks were never particularly reliable storage media in any case, so presumably anyone who wanted to preserve files would have moved their data to more modern and robust devices anyway.

I’ll discuss some aspects of media obsolescence further in a future post.

Digital Obsolescence: Survival Practices

So what can you do to ensure that your data won’t go extinct? There are several “best practices”, but unfortunately some of them involve a tradeoff, whereby you give up sophisticated formatting features in exchange for data survivability.

- Never rely on “cloud” storage for the long term. Cloud storage is very convenient for short-term data retention, or to make data available from multiple locations, but it’s a terrible idea for long-term retention. All kinds of bad things could happen to your data over long periods of time: the company hosting the data could have its servers hacked, or it could go out of business, or else you could simply forget where you stored the data, or the passwords you need to access it!

- Prefer open data formats to proprietary formats.

- Prefer XML-based formats to binary formats.

- Try to avoid saving data in encrypted or password-protected forms. If it must be stored securely, ensure that all passwords and encryption keys exist in written form, and that you’ll be able to access that information when you need it! (That is, ensure that the format of the key storage file won’t itself become extinct.)

- Expect formats to become obsolete, requiring you to convert files to newer formats every few years.

- Copy all the files to new media every few years, and try opening some of the copied files when you do this. This reduces the danger that the media will become unreadable, either because of corruption or because physical readers are no longer available.
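The last practice in the list, copying files to new media and checking the copies, can be partly automated. The following Python sketch is one possible approach rather than a complete backup tool: it compares SHA-256 checksums of every file in an original folder against a copy. The function names (`sha256_of`, `build_manifest`, `find_problems`) and the demonstration folders are hypothetical, and matching checksums show only that the bytes were copied intact, not that any software can still open the format, so it’s still worth opening a few files by hand.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute a file's SHA-256 checksum, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its checksum."""
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def find_problems(original: Path, copy: Path) -> list[str]:
    """List files that are missing from the copy or whose contents differ."""
    source = build_manifest(original)
    target = build_manifest(copy)
    problems = []
    for name, checksum in source.items():
        if name not in target:
            problems.append(f"missing: {name}")
        elif target[name] != checksum:
            problems.append(f"changed: {name}")
    return problems


# Demonstration using throwaway folders, so no real data is touched.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "original"
    copy = Path(tmp) / "copy"
    original.mkdir()
    (original / "diary.txt").write_text("Dear diary", encoding="utf-8")
    shutil.copytree(original, copy)
    clean = find_problems(original, copy)

    # Simulate corruption of the copied file.
    (copy / "diary.txt").write_text("Dear diarx", encoding="utf-8")
    damaged = find_problems(original, copy)

print(clean)    # []
print(damaged)  # ['changed: diary.txt']
```

In real use, you would call `find_problems` with the paths of your existing archive and the newly written copy, and investigate anything it reports before retiring the old media.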

Sometimes you’ll see recommendations for more drastic formatting restrictions, such as storing text files as plain-text only. Personally, I don’t recommend following such practices, unless the data content is extremely critical, and you can live within the restrictions. If you follow the rules above consistently, you should be relatively safe from “data extinction”.